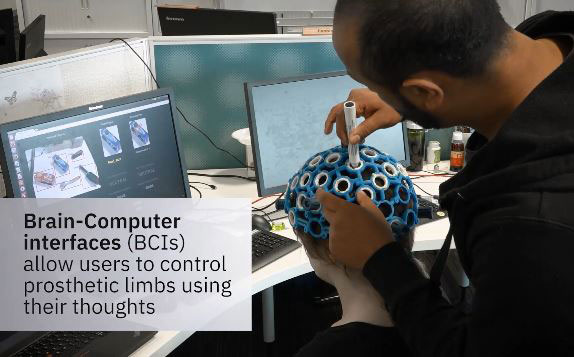

Prosthetic devices are getting smarter all the time, enabling people with serious injuries or amputations to live independently. IBM researchers have recently developed a brain-machine interface that uses AI to enhance prosthetic limbs. They used deep-learning algorithms to decode a user's intended actions from EEG data, then had a robotic arm carry out the task.


The robotic arm was linked to a camera and a deep-learning framework called GraspNet. As IBM explains, the system:

> outperforms state of the art deep learning models in terms of grasp accuracy with fewer parameters, a memory footprint of only 7.2MB and real time inference speed on an Nvidia Jetson TX1 processor.

[HT]
